Author's notes...

Several years ago I added a utility class to the Orthogonal.Common.Basic library that scanned the file system for duplicate files. That class became outdated due to language and Framework advances and was removed from the library in July 2018. Since I often have to run duplicate file scans, I wrote a fresh duplicate scan library that is more flexible and, thanks to parallelism, more efficient.
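The library's internals aren't described here, but a common way to parallelize a duplicate scan is to group files by length first (files of different lengths can't be duplicates) and then hash the remaining candidates on several threads. The following is a minimal sketch under that assumption. `DupScanSketch` and `FindDuplicates` are hypothetical names, not the library's API, and for brevity the sketch hashes whole files with MD5.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Security.Cryptography;
using System.Threading.Tasks;

// Hypothetical sketch of a parallel duplicate scan; names are illustrative only.
public static class DupScanSketch
{
    // Returns groups of two or more files whose lengths and MD5 hashes both match.
    public static List<List<string>> FindDuplicates(string folder, bool recursive)
    {
        var option = recursive ? SearchOption.AllDirectories : SearchOption.TopDirectoryOnly;

        // Files can only be duplicates if they have the same length, so files
        // with a unique length are discarded before any hashing takes place.
        var candidates = Directory.EnumerateFiles(folder, "*", option)
            .GroupBy(path => new FileInfo(path).Length)
            .Where(group => group.Count() > 1)
            .SelectMany(group => group.Select(path => new { Length = group.Key, Path = path }))
            .ToList();

        // Hash the remaining candidates in parallel; the concurrent collections
        // let several threads add results at the same time.
        var byKey = new ConcurrentDictionary<string, ConcurrentBag<string>>();
        Parallel.ForEach(candidates, candidate =>
        {
            string key = candidate.Length + ":" + Md5Hex(candidate.Path);
            byKey.GetOrAdd(key, _ => new ConcurrentBag<string>()).Add(candidate.Path);
        });

        // Keep only keys shared by more than one file, sorted for stable output.
        return byKey.Values
            .Where(bag => bag.Count > 1)
            .Select(bag => bag.OrderBy(p => p, StringComparer.OrdinalIgnoreCase).ToList())
            .ToList();
    }

    // MD5 of a whole file as a hex string.
    static string Md5Hex(string path)
    {
        using (var md5 = MD5.Create())
        using (var stream = File.OpenRead(path))
        {
            return BitConverter.ToString(md5.ComputeHash(stream));
        }
    }
}
```

Grouping by length first means files with a unique length are never opened at all, which typically saves more time than any difference between hash algorithms.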

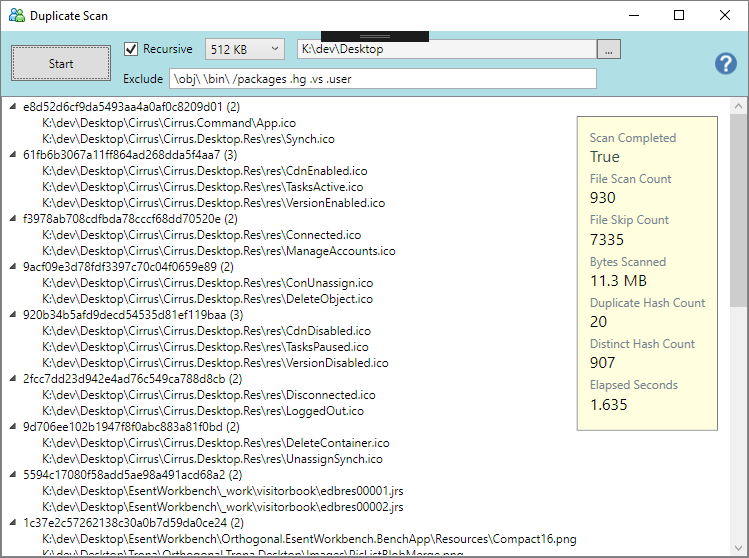

The new library is published in this repository in case it's useful to other developers. The Visual Studio 2017 solution also contains two projects that wrap the library to expose its functionality: a DOS command and a WPF Windows program.

The Windows program has some useful features, such as the ability to delete a duplicate file directly from the results tree. You can also right-click the results to save them as text or XML files.

I haven't run a search, but I'll bet there are lots of public utilities already available to scan for duplicate files. I don't mind, though, as my projects were a good technical exercise and they do exactly what I need.

Technical Notes

The first version of the library used the CRC64 checksum algorithm to hash file contents for comparison, but the code had to be pasted in from another project because CRC64 isn't provided in the standard .NET Framework. I then switched to MD5, which is built into the cryptography classes. I was afraid MD5 might be a bit slower, so I ran some timing tests that hashed about 1 TB of photo files with both algorithms, and found that MD5 was fractionally faster than CRC64. This quite surprised me, as MD5 is a more complex algorithm. For comparison, I then hashed 2 GB of in-memory byte buffers in a loop and found that MD5 was about 40% slower than CRC64. This hints that the MD5 code processes file streams more efficiently; it's also likely that reading from disk, rather than computing the hash, dominates the time when hashing files. Since this library processes files, the difference in speed is marginal, so I prefer MD5 because it's built in.
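Hashing only the leading part of each file, as the sample's `HashKB` parameter and the command's `/maxkb:` switch suggest, fits naturally with the built-in MD5 class because it can be fed data in pieces. A minimal sketch, assuming a hypothetical `LeadingHash.Md5OfLeadingBytes` helper (not the library's API) that reads at most `hashKB` kilobytes in 64 KB chunks through `TransformBlock` and `TransformFinalBlock`:

```csharp
using System;
using System.IO;
using System.Security.Cryptography;

// Hypothetical helper for illustration; not the library's actual code.
public static class LeadingHash
{
    // Returns the MD5 hash (lowercase hex) of at most the first hashKB kilobytes
    // of a file, feeding the bytes to MD5 incrementally in 64 KB chunks.
    public static string Md5OfLeadingBytes(string path, int hashKB)
    {
        long remaining = hashKB * 1024L;
        var chunk = new byte[64 * 1024];
        using (var md5 = MD5.Create())
        using (var stream = File.OpenRead(path))
        {
            while (remaining > 0)
            {
                int read = stream.Read(chunk, 0, (int)Math.Min(chunk.Length, remaining));
                if (read == 0) break; // end of file reached before the limit
                md5.TransformBlock(chunk, 0, read, null, 0);
                remaining -= read;
            }
            // An empty final block completes the hash computation.
            md5.TransformFinalBlock(new byte[0], 0, 0);
            return BitConverter.ToString(md5.Hash).Replace("-", "").ToLowerInvariant();
        }
    }
}
```

Reading in fixed-size chunks keeps memory use constant regardless of `hashKB`; when the whole file is to be hashed, `MD5.ComputeHash(Stream)` is the simpler choice.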

Some might argue that MD5's 128-bit hash is superior to CRC64's 64-bit hash because there is less chance of collisions. This is true; however, the table in the Birthday Attack article reveals that you would have to hash around six million files before the probability of a 64-bit collision rises to 0.000001 (one in a million). Still, it's best to be safe, and since the performance varies only slightly when hashing files, I decided once again to stick with MD5.
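Tables like that come from the standard birthday approximation: for n random b-bit hash values, the probability of at least one collision is roughly p = 1 - exp(-n^2 / 2^(b+1)). A small sketch for checking the numbers, with illustrative class and method names; it treats the hashes as uniformly random, which is an idealization for both CRC64 and MD5:

```csharp
using System;

// Illustrative helpers for the birthday approximation; names are hypothetical.
public static class BirthdayBound
{
    // Approximate probability of at least one collision among n random b-bit hashes:
    // p = 1 - exp(-n^2 / 2^(b+1)).
    public static double CollisionProbability(double n, int bits)
    {
        double x = n * n / Math.Pow(2, bits + 1);
        // For tiny x, 1 - exp(-x) loses precision in double arithmetic,
        // so the first two terms of its series are used instead.
        return x < 1e-5 ? x - x * x / 2 : 1 - Math.Exp(-x);
    }

    // Approximate number of hashes needed before the collision probability reaches p:
    // n = sqrt(2^(b+1) * ln(1 / (1 - p))).
    public static double FilesForProbability(double p, int bits)
    {
        return Math.Sqrt(Math.Pow(2, bits + 1) * -Math.Log(1 - p));
    }
}
```

For 64-bit hashes, `FilesForProbability(1e-6, 64)` comes out at roughly six million files, while the collision probability for the same number of 128-bit hashes is about 5 × 10^-26.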

Sample

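```csharp
using System;     // Progress<T>
using System.IO;  // DirectoryInfo
// DupScanParameters, DupScanner, DupScanProgress and ProgressReason come from the
// duplicate scan library; Trace is assumed to be the caller's own logging method.

// ...

var scanparams = new DupScanParameters
{
    ScanFolder = new DirectoryInfo(@"D:\Sample\Projects"),
    HashKB = 128,      // leading kilobytes of each file to hash
    Recursive = true,  // include sub-folders
    // Case-insensitive substrings of a file's full name that cause it to be skipped.
    // Windows full names use backslash separators.
    ExcludeFullNameContains = new string[]
    {
        ".tmp", ".temp", @"\bin\", @"\obj\", ".hg"
    }
};

// Progress<T> invokes DupCallback for each progress report from the scanner.
var scanner = new DupScanner(new Progress<DupScanProgress>(DupCallback));
scanner.Start(scanparams);

// ...

void DupCallback(DupScanProgress progress)
{
    switch (progress.Reason)
    {
        case ProgressReason.Started:
            Trace("Scan has started");
            break;
        case ProgressReason.Progress:
            Trace($"count={progress.ScanCount} file={progress.Filename}");
            break;
        case ProgressReason.Completed:
            Trace($"result={progress.Result}");
            break;
    }
}
```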
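The `ExcludeFullNameContains` list and the command's `/x:` switch are both described as a case-insensitive "contains" test on a file's full name. A minimal sketch of such a test, assuming a hypothetical `ExcludeFilter.IsExcluded` helper rather than the library's own code:

```csharp
using System;
using System.Linq;

// Hypothetical helper for illustration; not the library's actual code.
public static class ExcludeFilter
{
    // True if any exclude string occurs anywhere in the full name, ignoring case.
    public static bool IsExcluded(string fullName, string[] excludes)
    {
        return excludes.Any(x => fullName.IndexOf(x, StringComparison.OrdinalIgnoreCase) >= 0);
    }
}
```

`IndexOf` with `StringComparison.OrdinalIgnoreCase` is used because the `Contains` overload that takes a `StringComparison` isn't available in the .NET Framework. Because Windows full names use backslashes, a pattern such as `"/bin/"` will not match a path like `D:\Sample\Projects\App\bin\App.exe`.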

WPF Windows Program

DOS Command Help

```
OVERVIEW

A command line utility over the duplicate scan library.

SYNTAX

dupscan {folder} [ /s /maxkb:{kb} /x:{exclude} ... ]

PARAMETERS

{folder} .......... The folder to scan.
/s ................ Recursive scan through sub-folders.
{kb} .............. The maximum number of leading kilobytes of a file to scan.
{exclude} ......... A case-insensitive string that will cause a file to be skipped
                    if any part of its full name contains the string.

EXAMPLE

dupscan C:\TestData\Photos /s /maxkb:128 /x:.tmp /x:\junk\ /x:.ini
```
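The syntax above is simple enough to parse by hand. The following is a hypothetical sketch of how `{folder}`, `/s`, `/maxkb:` and `/x:` could be mapped onto an options object; `DupScanArgs` is an illustrative type, not the command's actual code, and matching the switches case-insensitively is an assumption.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical options type for illustration; not the DOS command's actual code.
public sealed class DupScanArgs
{
    public string Folder { get; private set; }
    public bool Recursive { get; private set; }
    public int? MaxKB { get; private set; }
    public List<string> Excludes { get; } = new List<string>();

    // Maps "{folder} [ /s /maxkb:{kb} /x:{exclude} ... ]" onto the properties above.
    public static DupScanArgs Parse(string[] args)
    {
        var result = new DupScanArgs();
        foreach (var arg in args)
        {
            if (arg.Equals("/s", StringComparison.OrdinalIgnoreCase))
                result.Recursive = true;
            else if (arg.StartsWith("/maxkb:", StringComparison.OrdinalIgnoreCase))
                result.MaxKB = int.Parse(arg.Substring("/maxkb:".Length));
            else if (arg.StartsWith("/x:", StringComparison.OrdinalIgnoreCase))
                result.Excludes.Add(arg.Substring("/x:".Length)); // /x: may repeat
            else if (arg.StartsWith("/", StringComparison.Ordinal))
                throw new ArgumentException("Unknown switch: " + arg);
            else if (result.Folder == null)
                result.Folder = arg; // the single positional argument
            else
                throw new ArgumentException("Unexpected argument: " + arg);
        }
        if (result.Folder == null)
            throw new ArgumentException("The folder to scan is required.");
        return result;
    }
}
```

Parsing the EXAMPLE line this way yields the folder `C:\TestData\Photos`, a recursive scan, a 128 KB limit and three exclude strings.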